AI Demonstrators of the CAI

The Centre for Artificial Intelligence (CAI) conducts research and teaching in the field of artificial intelligence. In line with this mission, the CAI develops AI demonstrators that give students and the general public hands-on insight into artificial intelligence. These demonstrators showcase what AI can do and also shed light on its possibilities and limitations. This webpage provides an overview of the demonstrators developed as part of the CAI’s teaching and research activities.

Should you have any inquiries or wish to arrange an AI demonstration for your event, please do not hesitate to contact Pascal Sager, Head of AI Demonstrators.

Upcoming Events

(Updated 2023-05-17)

17 May, 2023: Annual Congress of the Swiss Academy of Technical Sciences SATW

3 June, 2023: Open Day at the Mechatronik Schule Winterthur MSW

6 June, 2023: Digital Day at Roche Diagnostics

7 June, 2023: Green Bytes Event organized by DIZH

12 June, 2023: IT Fire

7 July, 2023: Night of Technology at ZHAW

Unitree A1 Robodog

The Unitree A1 Robodog is a quadruped robot capable of dynamic locomotion, including walking, running, and a set of predefined maneuvers. Its sensory system, comprising a camera and a LIDAR sensor, enables the robot to perceive and interpret its surroundings. At the core of the robot’s operation is an on-board computer built around an NVIDIA Jetson Nano and running a ROS-based software stack. This allows the robot to perform complex computations on board, in particular running deep learning models for decision-making.

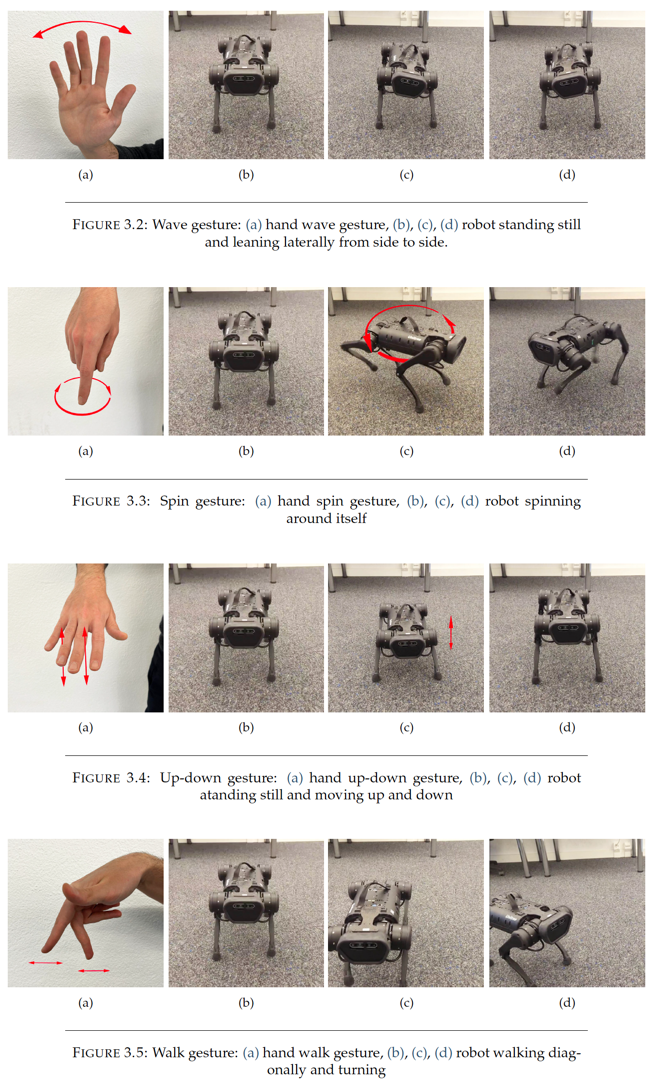

The integrated camera is used to recognize hand gestures. Once a gesture is identified, the robot executes the corresponding action. This interaction paradigm allows users to control the robot through a range of intuitive gestures:

Furthermore, the LIDAR sensor is used for environmental analysis and obstacle detection. It allows the robot to identify obstacles in its surroundings and avoid collisions while walking or running.
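A minimal sketch of the obstacle check described above, assuming a planar LIDAR scan given as a list of distances with a start angle and a fixed angular step (the field names `angle_min` and `angle_increment` mirror the ROS `LaserScan` message, but the function, the 30° sector and the 0.5 m stop distance are hypothetical, not the demonstrator's actual code). The robot stops if any valid return in the sector straight ahead is closer than the threshold.

```python
import math
from typing import Sequence


def obstacle_ahead(
    ranges: Sequence[float],
    angle_min: float,
    angle_increment: float,
    sector_half_width: float = math.radians(30),  # assumed forward sector
    stop_distance: float = 0.5,  # assumed threshold in metres
) -> bool:
    """Return True if a valid LIDAR return in the forward sector is closer than stop_distance."""
    for i, r in enumerate(ranges):
        angle = angle_min + i * angle_increment  # angle of beam i; 0 rad = straight ahead
        if abs(angle) > sector_half_width:
            continue  # beam points sideways or backwards
        # Skip invalid readings (inf/NaN or zero) that some sensors report for "no return".
        if math.isfinite(r) and 0.0 < r < stop_distance:
            return True
    return False
```

In a control loop, a `True` result would make the locomotion controller stop or steer away before continuing to walk or run.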

To facilitate audience engagement and comprehension, both the camera feed and LIDAR output are streamed to an external monitor. This visual representation enables spectators to observe the robot’s perspective and comprehend the decision-making processes underlying its actions.
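To illustrate how a recognized hand gesture can trigger a robot action, here is a minimal sketch assuming the gesture recognizer outputs a label and a confidence score. The gesture labels, actions and the 0.8 threshold are hypothetical; the demonstrator's actual gesture set and command interface are not documented on this page.

```python
from typing import Callable, Dict, Optional


# Hypothetical robot actions. On the real robot these would send commands
# through the ROS stack; here each one just returns the command name.
def stand_up() -> str:
    return "stand_up"


def lie_down() -> str:
    return "lie_down"


def walk_forward() -> str:
    return "walk_forward"


# Hypothetical mapping from gesture-classifier labels to robot actions.
GESTURE_ACTIONS: Dict[str, Callable[[], str]] = {
    "open_palm": stand_up,
    "fist": lie_down,
    "point": walk_forward,
}

CONFIDENCE_THRESHOLD = 0.8  # assumed value; filters out uncertain predictions


def dispatch_gesture(label: str, confidence: float) -> Optional[str]:
    """Run the action for a recognized gesture; ignore unknown or low-confidence predictions."""
    if confidence < CONFIDENCE_THRESHOLD:
        return None
    action = GESTURE_ACTIONS.get(label)
    return action() if action is not None else None
```

A lookup table keeps the gesture vocabulary separate from the robot control code, so gestures can be added or remapped without touching the recognizer.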

EEG - Control a Video Game with Thoughts

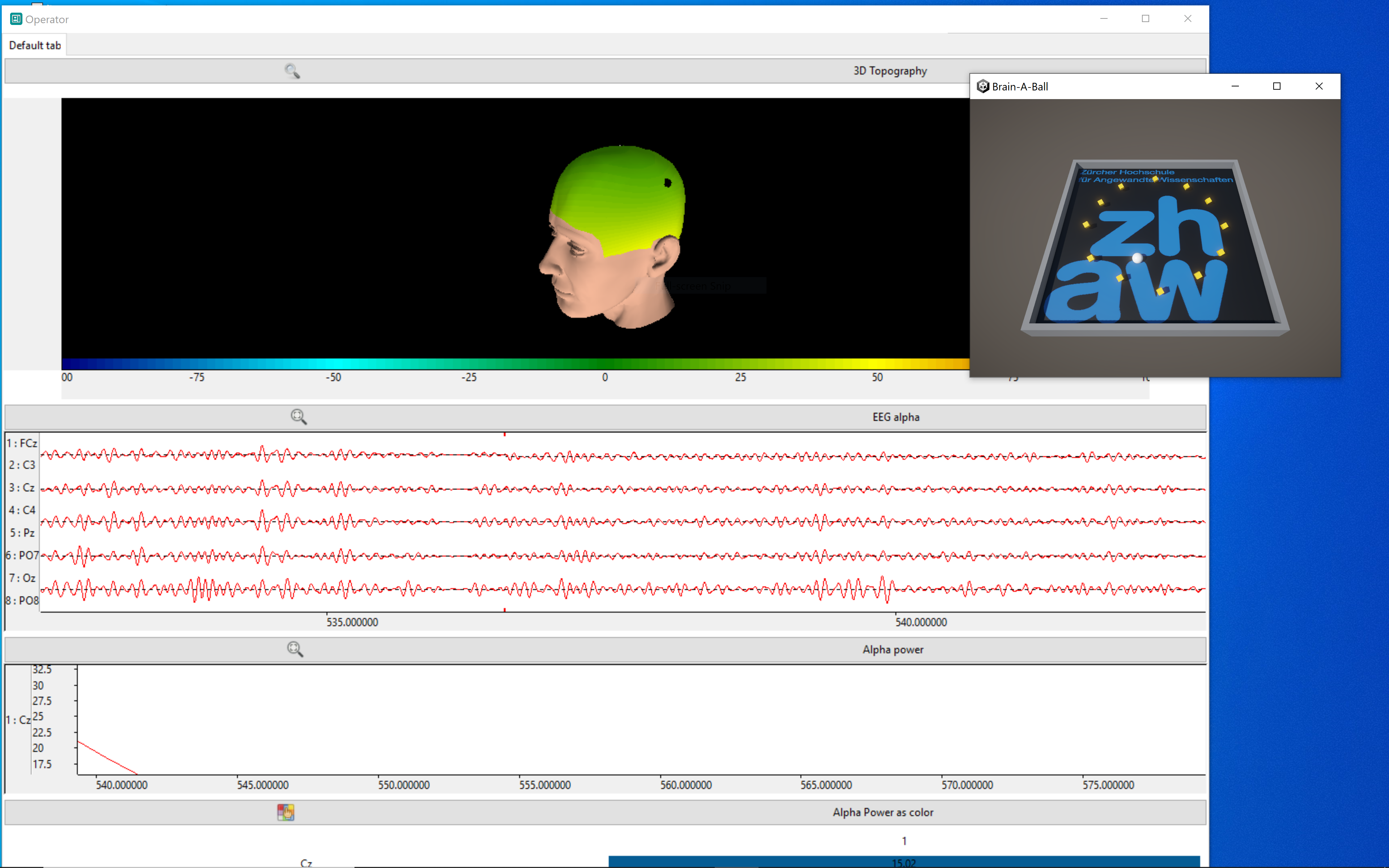

The EEG demonstrator enables users to control a video game using their thoughts. By wearing an EEG headset, the user’s brain activity is measured and sent to a computer for analysis. The computer then translates the data into commands for the game.
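This page does not state which EEG features the demonstrator uses. A common heuristic, shown here purely as an assumption, associates alpha-band power (about 8 to 13 Hz) with relaxation and beta-band power (about 13 to 30 Hz) with focus. The sketch computes a focus score in [0, 1] from one channel using a simple FFT periodogram; `band_power` and `focus_index` are hypothetical names.

```python
import numpy as np


def band_power(signal: np.ndarray, fs: float, low: float, high: float) -> float:
    """Mean spectral power of `signal` in the band [low, high) Hz (simple periodogram)."""
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    psd = np.abs(np.fft.rfft(signal)) ** 2 / signal.size
    mask = (freqs >= low) & (freqs < high)
    return float(psd[mask].mean())


def focus_index(signal: np.ndarray, fs: float) -> float:
    """Share of beta power relative to alpha + beta: near 0 = relaxed, near 1 = focused."""
    alpha = band_power(signal, fs, 8.0, 13.0)
    beta = band_power(signal, fs, 13.0, 30.0)
    return beta / (alpha + beta)
```

In practice the score would be computed over a sliding window of a few seconds and smoothed before being turned into a game command.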

In the game, the user controls a ball that moves in a circular path. The size of the circle depends on the user’s level of relaxation or focus. If the user is relaxed, the circle becomes smaller, and if they are more focused, the circle becomes bigger.
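The relationship between mental state and circle size can be sketched as a linear mapping, assuming a focus score between 0 (fully relaxed) and 1 (fully focused). The radius range in pixels and the function names are hypothetical.

```python
import math
from typing import Tuple

R_MIN, R_MAX = 50.0, 200.0  # assumed radius range in pixels


def circle_radius(focus: float) -> float:
    """Relaxed (focus near 0) gives a small circle, focused (near 1) a large one."""
    focus = min(max(focus, 0.0), 1.0)  # clamp noisy scores into [0, 1]
    return R_MIN + focus * (R_MAX - R_MIN)


def ball_position(center: Tuple[float, float], focus: float, angle: float) -> Tuple[float, float]:
    """Position of the ball on the circle at the given angle (radians)."""
    cx, cy = center
    r = circle_radius(focus)
    return (cx + r * math.cos(angle), cy + r * math.sin(angle))
```

Each frame, the game would advance `angle` by a fixed step and recompute the position, so changing one's level of focus moves the ball inwards or outwards towards the stones.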

The goal of the game is to guide the ball to collect all the yellow stones.

To explain the underlying technology, the demonstrator also features a visualization of the user’s brain activity. The visualization shows the user’s level of relaxation and focus over time.

Also see: www.srf.ch (23:17)

Swiss German to Image (Generative AI)

📺 Video presentation of the project [EN] https://youtu.be/SVxiDAktYoc

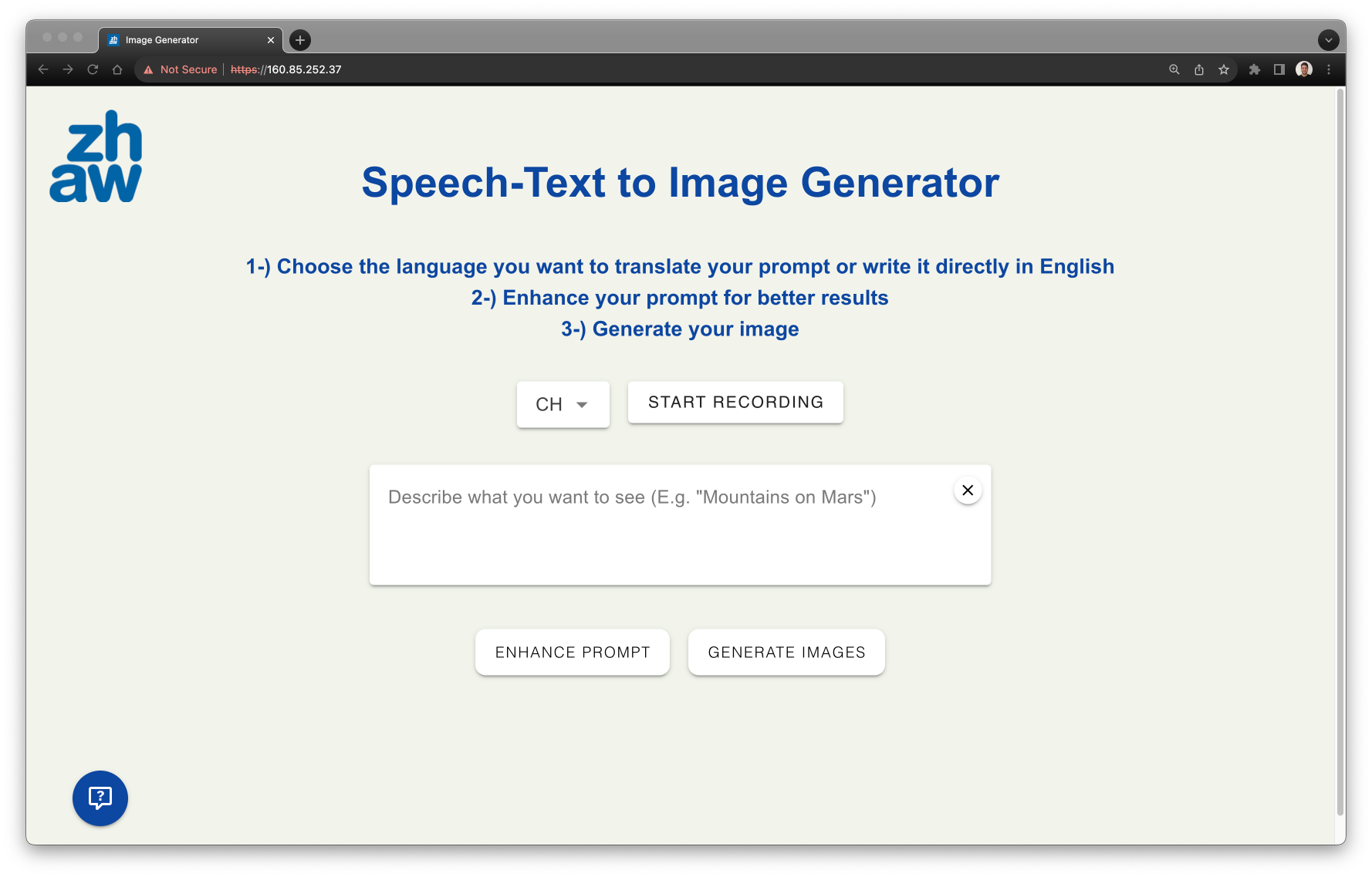

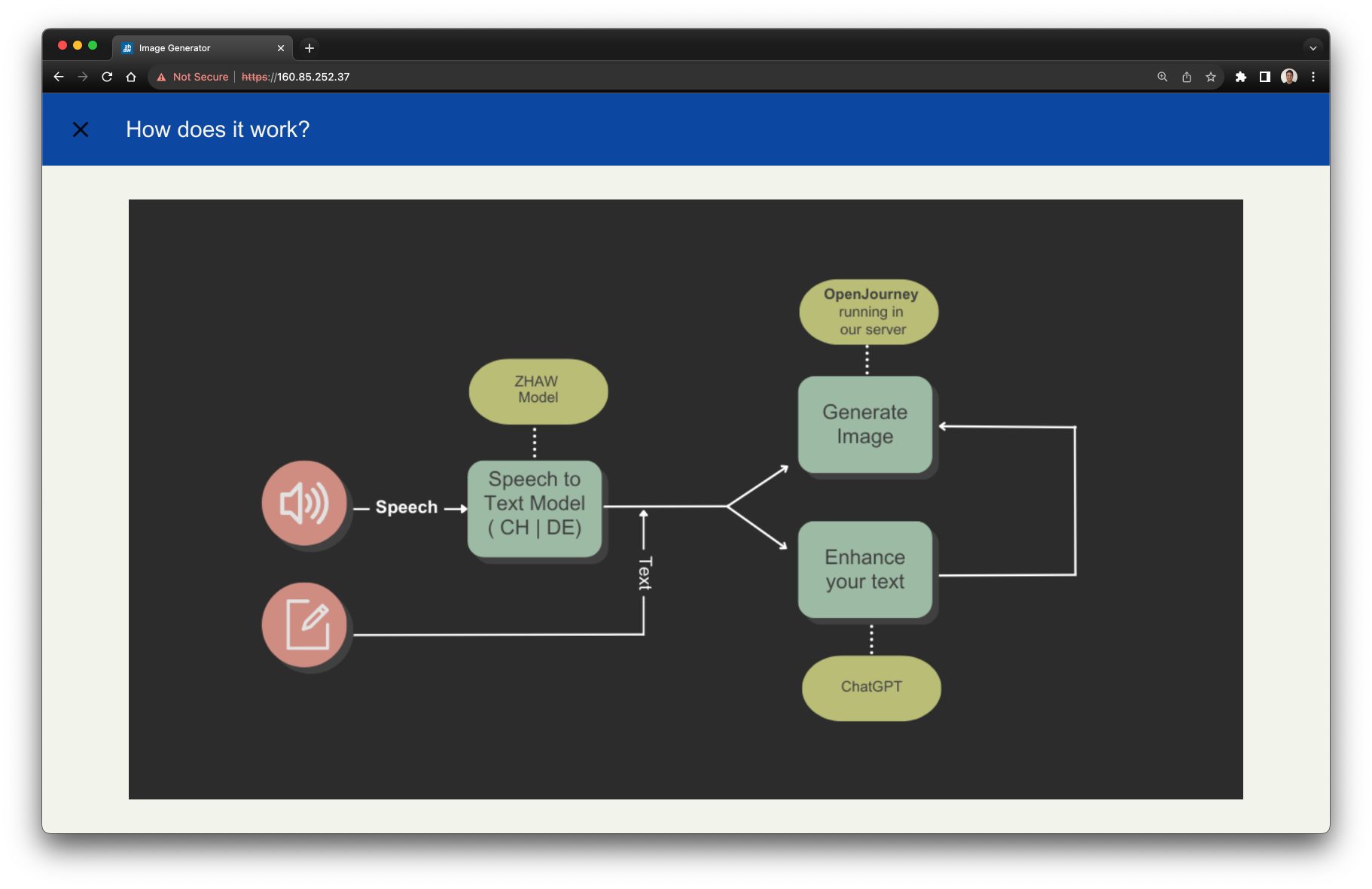

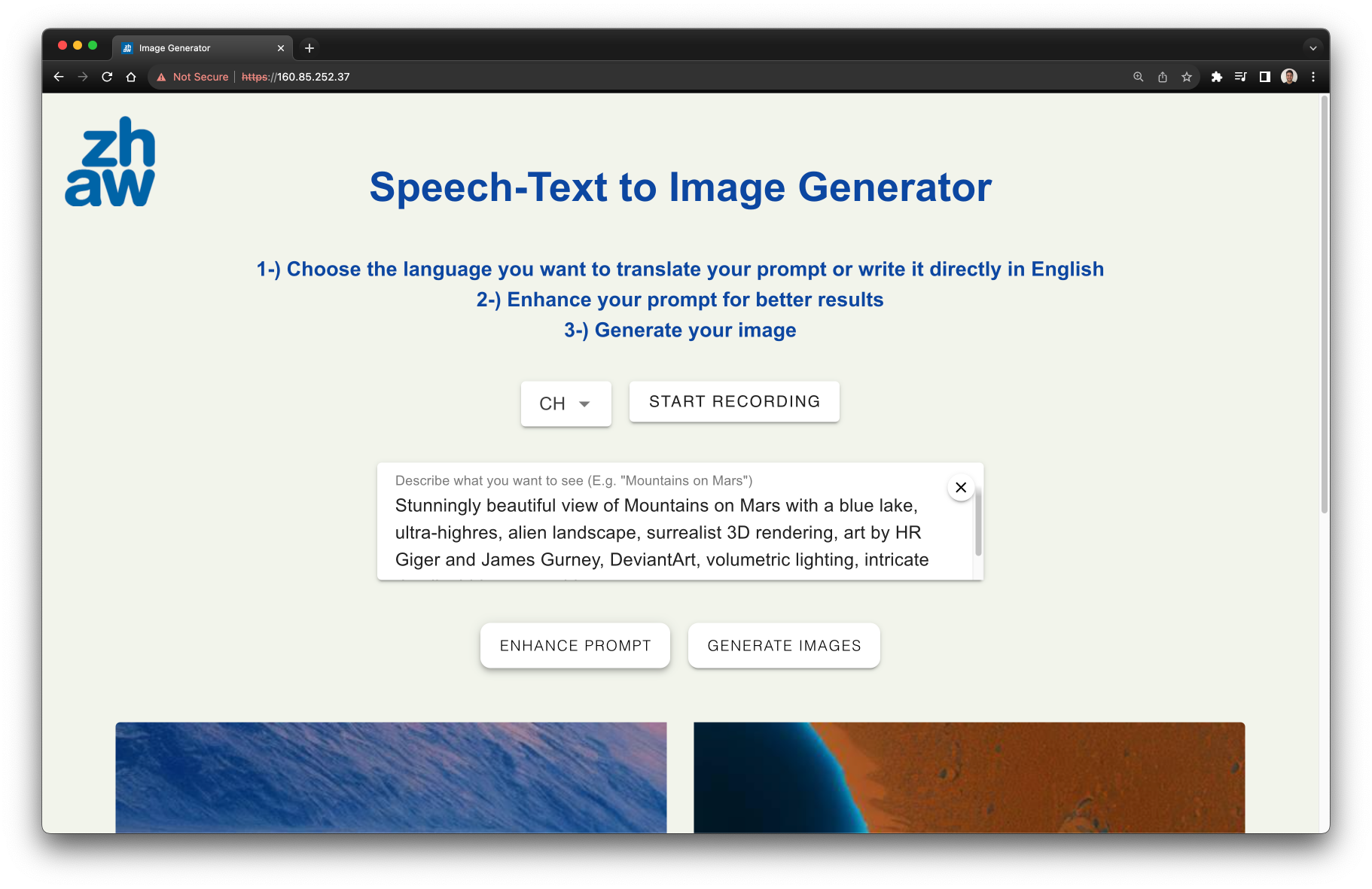

The goal of the project is to enable direct speech-to-text-to-image generation: with one click and a prompt, you can generate two completely new images. To do this, three distinct machine learning models are used. First, you can speak what you want to see in Swiss German or German, and a translation model translates it into English (the Swiss German to English model was developed by ZHAW). Alternatively, you can type your prompt directly in English. Once the prompt is ready, you can enhance it with the second model, ChatGPT, which serves as a prompt generator for the third model, the image generator, which produces the images.
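The three-stage flow can be sketched as a composition of interchangeable functions. The callables below are hypothetical stand-ins for the translation model, ChatGPT and the image generator, not the project's real interfaces; images are represented abstractly.

```python
from typing import Callable, List, Optional

# Hypothetical stand-ins for the three models (not the project's real interfaces):
Translator = Callable[[bytes], str]  # Swiss German/German speech -> English prompt
Enhancer = Callable[[str], str]  # short prompt -> detailed prompt (ChatGPT's role)
ImageGenerator = Callable[[str, int], List[str]]  # prompt, count -> generated images


def run_pipeline(
    generate: ImageGenerator,
    *,
    audio: Optional[bytes] = None,
    text: Optional[str] = None,
    translate: Optional[Translator] = None,
    enhance: Optional[Enhancer] = None,
    num_images: int = 2,
) -> List[str]:
    """Speech (or typed English) -> optional prompt enhancement -> image generation."""
    if text is None:
        # Spoken input must first be translated into English.
        if audio is None or translate is None:
            raise ValueError("provide English text, or audio together with a translator")
        text = translate(audio)
    # Enhancement is optional, mirroring the "you can enhance it" step.
    prompt = enhance(text) if enhance is not None else text
    return generate(prompt, num_images)
```

Keeping each stage behind a plain function signature makes it easy to swap the translation model or the image generator without changing the rest of the pipeline.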

After typing the prompt “Mountains on Mars with a blue lake” and clicking the “Generate Image” button, you can watch the denoising process as the image is generated.

The image generator is Openjourney, an open-source, custom text-to-image model that generates AI art images. Openjourney is a diffusion model. Diffusion models are generative models, meaning that they are used to generate data similar to the data on which they were trained. Fundamentally, they work by corrupting training data through the successive addition of Gaussian noise and then learning to recover the data by reversing this noising process. After training, the model generates new data by passing randomly sampled noise through the learned denoising process.
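The noising process can be made concrete with the standard DDPM formulation, shown here as a generic sketch rather than Openjourney’s actual implementation. With a noise schedule $\beta_t$ and $\bar\alpha_t = \prod_{s \le t}(1-\beta_s)$, a noisy sample at step $t$ can be drawn in one shot as $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$ with $\epsilon \sim \mathcal{N}(0, I)$. The linear schedule over 1000 steps is the DDPM default, assumed here; during training a neural network learns to predict $\epsilon$ from $x_t$ and $t$.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)  # linear noise schedule (DDPM defaults, assumed)
alphas_bar = np.cumprod(1.0 - betas)  # cumulative product, alpha-bar_t


def add_noise(x0: np.ndarray, t: int, rng: np.random.Generator):
    """Sample x_t ~ N(sqrt(abar_t) * x0, (1 - abar_t) * I) directly from clean data x0."""
    eps = rng.standard_normal(x0.shape)
    xt = np.sqrt(alphas_bar[t]) * x0 + np.sqrt(1.0 - alphas_bar[t]) * eps
    return xt, eps


def predict_x0(xt: np.ndarray, t: int, eps_pred: np.ndarray) -> np.ndarray:
    """Invert the noising formula given a noise estimate (in practice, the network's prediction)."""
    return (xt - np.sqrt(1.0 - alphas_bar[t]) * eps_pred) / np.sqrt(alphas_bar[t])
```

At the last step $\bar\alpha_T$ is close to zero, so $x_T$ is almost pure noise; generation runs the learned denoising in the opposite direction, starting from such noise.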

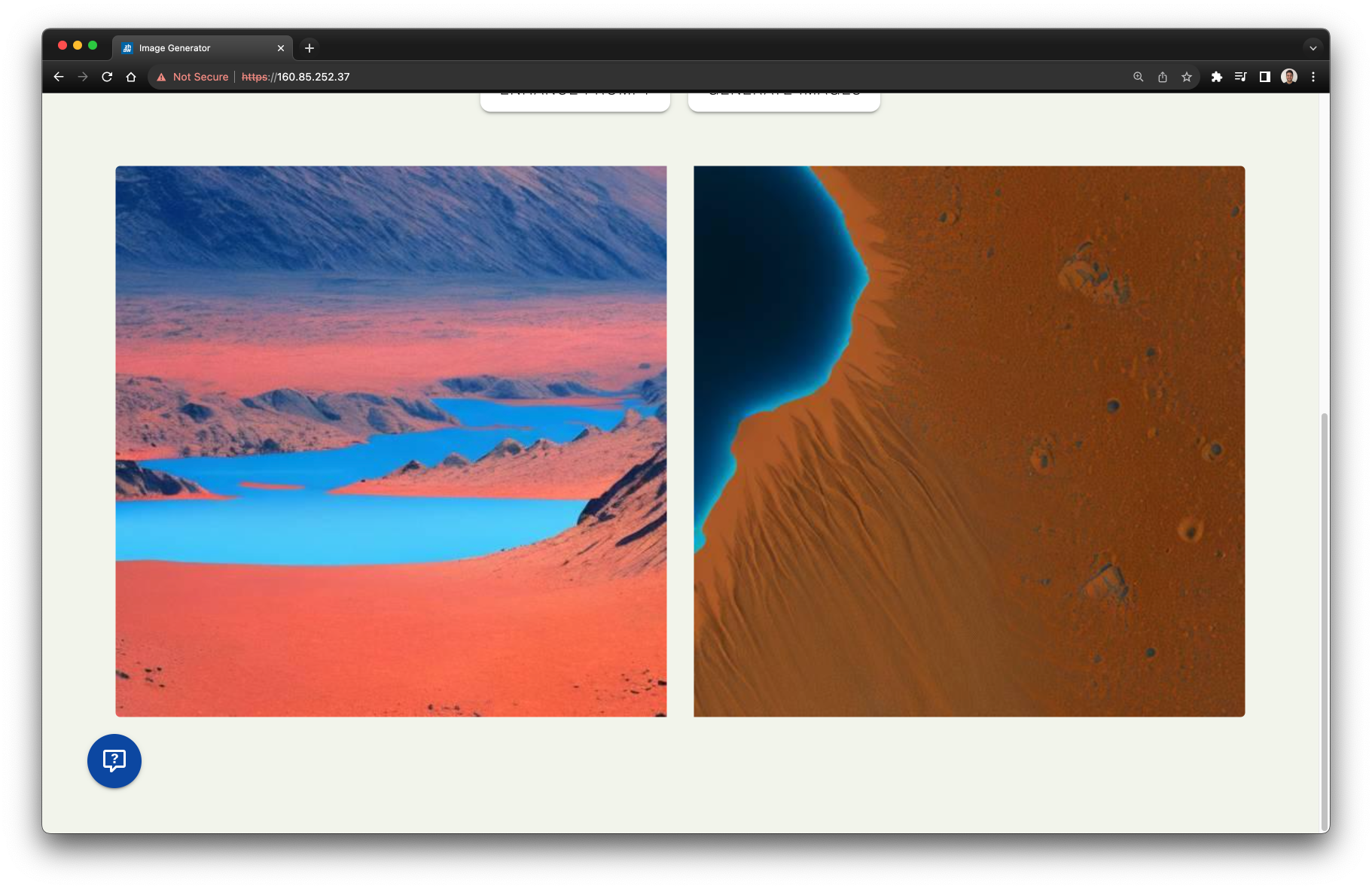

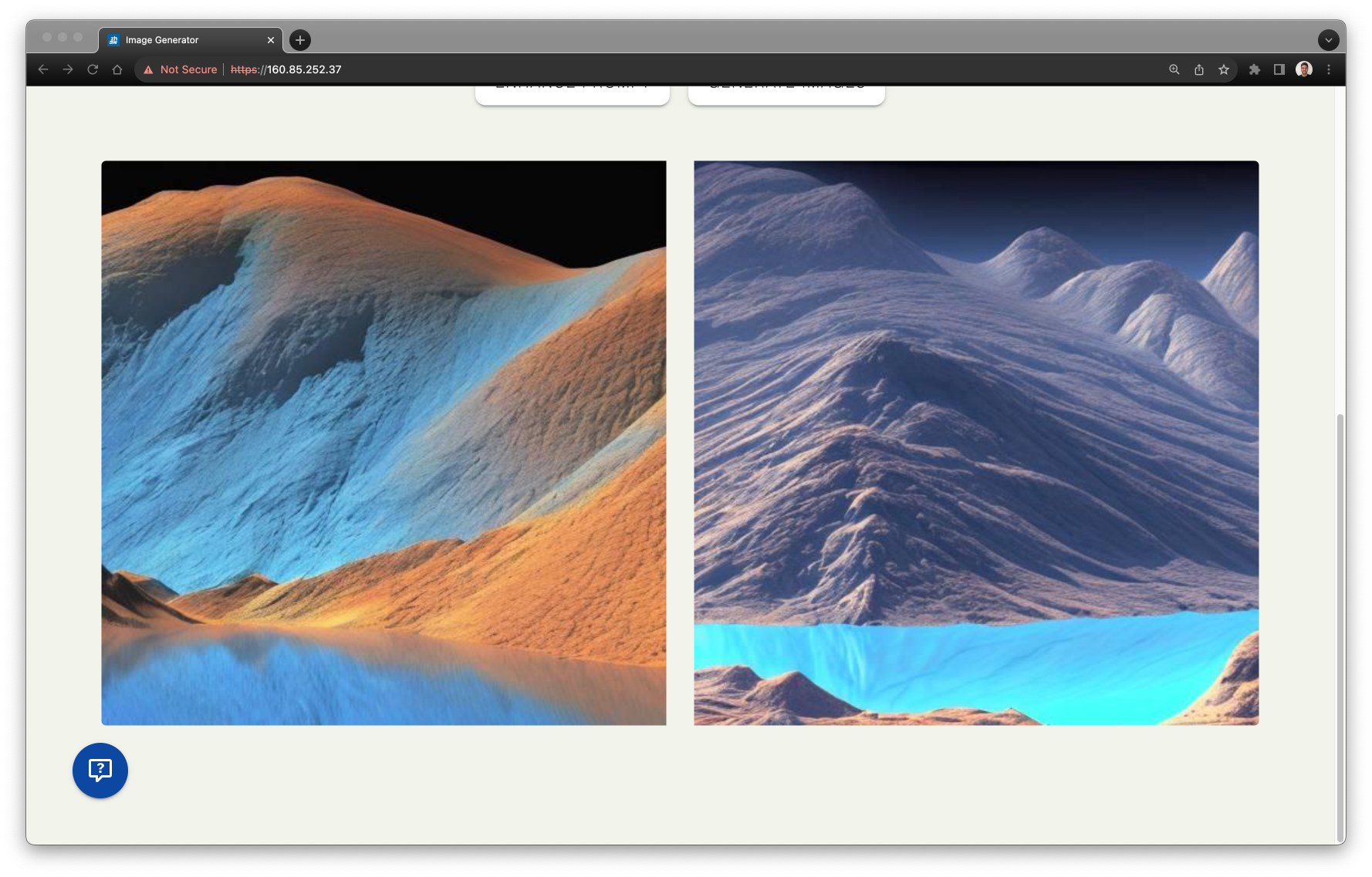

The generated images:

ChatGPT is used to improve the quality and level of detail of the prompt sent to the image generation model. Generating a good image requires knowing many keywords and parameters that increase the level of detail of the image. ChatGPT was prompt-engineered to enhance a simple prompt; enhancing the previous prompt yields “Stunningly beautiful view of Mountains on Mars with a blue lake, ultra-highres, alien landscape, surrealist 3D rendering, art by HR Giger and James Gurney, DenviantArt, volumetric lighting, intricate, warm and saturated colors.”
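Prompt engineering of this kind usually means wrapping the user’s short prompt in a fixed instruction. The sketch below builds a request in the role/content message format used by chat-based language model APIs; the system instruction is a hypothetical example, since the prompt actually used in the demonstrator is not published.

```python
from typing import Dict, List

# Hypothetical system instruction; the demonstrator's real prompt is not published.
SYSTEM_INSTRUCTION = (
    "You rewrite short image descriptions into detailed text-to-image prompts. "
    "Keep the original subject, then add style, artist, lighting, resolution "
    "and colour keywords. Answer with the prompt only."
)


def build_enhancement_messages(user_prompt: str) -> List[Dict[str, str]]:
    """Wrap a short user prompt in a chat request asking for a richer image prompt."""
    return [
        {"role": "system", "content": SYSTEM_INSTRUCTION},
        {"role": "user", "content": user_prompt.strip()},
    ]
```

With the `openai` Python package (v1), the list could be passed as `client.chat.completions.create(model=..., messages=messages)`, and the returned text would then be sent to the image generator.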

Generating the image again with the enhanced prompt produces the following two images:

Tabu Grandmaster

More information coming soon.